EM in a nutshell

date

Aug 2, 2019

slug

em_algo

status

Published

summary

A quick rundown on expectation maximization

tags

Learning

ML

EM

type

Post

EM in a nutshell

The idea behind EM is summarized as follows:

- Normally, in a learning problem, we are given a dataset , we think of a model that capture the probability of the dataset , and the model is governed by a bunch of parameters .

- What we do in machine learning (typically), is to solve for by optimizing for the likelihood of the data, given by

- one example would be logistic regression for multi-class classification, where

- To maximize the likelihood of the data, we typically take the log of the likelihood and maximize it using

- close-form solution (if available and dataset is small enough), e.g., linear regression

- gradient descent (if close-form solution is not available or dataset is too large)

- However, there're often cases where the distribution can't be modeled so "simply", meaning there're more structure to the underlying distribution, where the distribution of is not only determined by , but also by another set of random variables , one such example would be mixed gaussian where

because there's a sum inside the product, when taking the log of the likelihood, the close-form solution becomes very complex and hard to directly optimize for

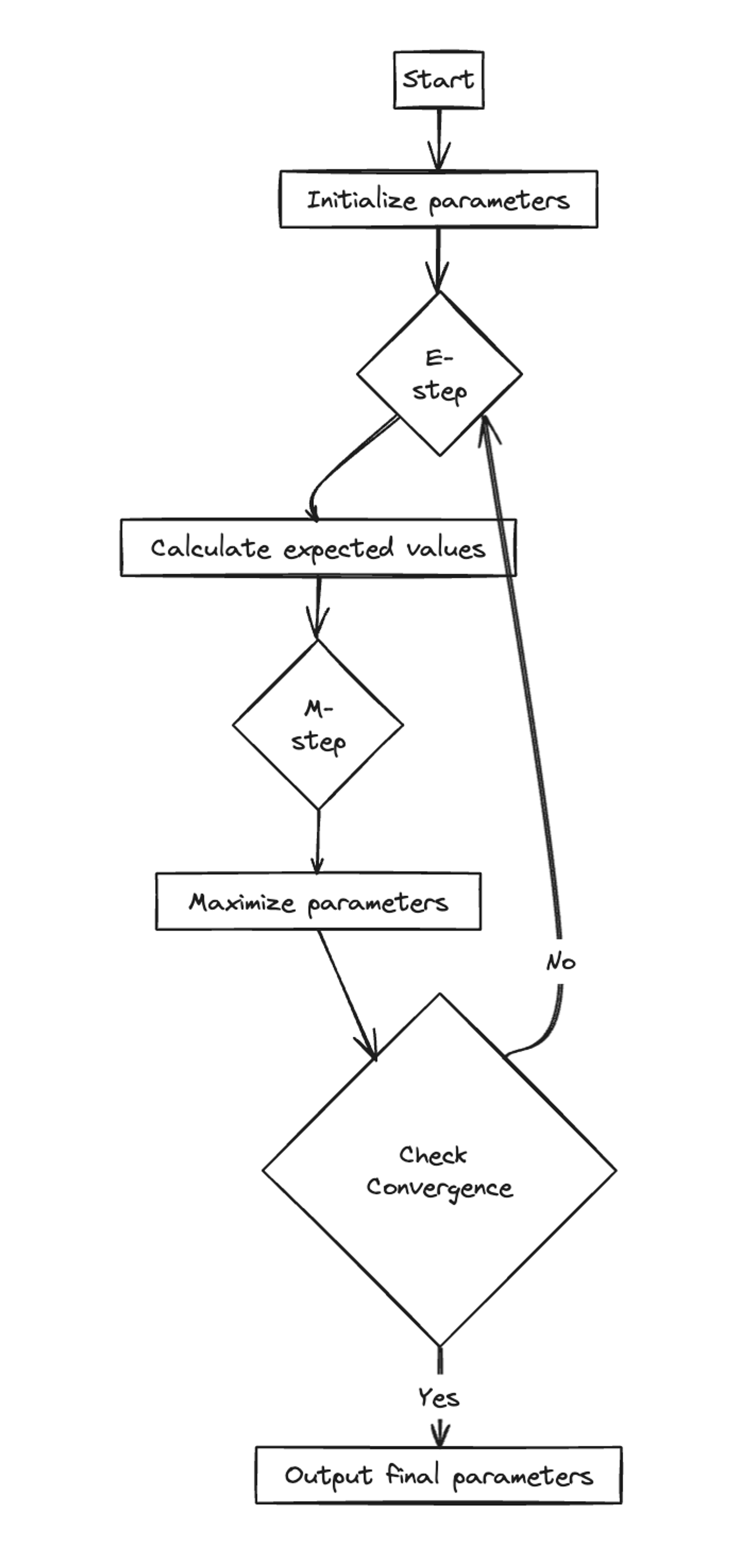

- Therefor, we optimize it through a two-step process

- Expectation Step: Fix to be , evaluate the posterior distribution of , i.e., because we know the form of the likelihood, we can calculate the posterior as long as a prior of is given.

- Maximization Step: Maximize the likelihood but use the posterior in place of the prior , i.e., maximize

- Repeat the above until convergence

- We can interpret the EM algorithm by considering a decomposition of the likelihood, i.e.,

where is an arbitrary prior of .

This decomposition tells us that for given a fixed , the best prior is found by letting it equal to the posterior , since it's the only way to make the KL divergence 0. Also, for a fixed , we can obtain the best by using maximal likelihood optimization.

Limitations

While the idea of EM is powerful, it is impractical in models where the evaluation of the posterior is impossible (think multi-layer deep neural network with nonlinearity in between).

To make inference about in those cases, we need to resort to another powerful tool (Variational Inference).